Maximizing Web Scraping: The Role of Proxy Servers

In the constantly changing landscape of online data extraction, web scraping has emerged as an effective tool for businesses, researchers, and marketers alike. However, accessing data from many different websites can often be daunting. This is where proxy servers come into play: they act as intermediaries that not only enable data access but also help preserve anonymity and security. Understanding the role of proxies in web scraping is essential for anyone looking to improve their data-gathering efforts without hitting snags.

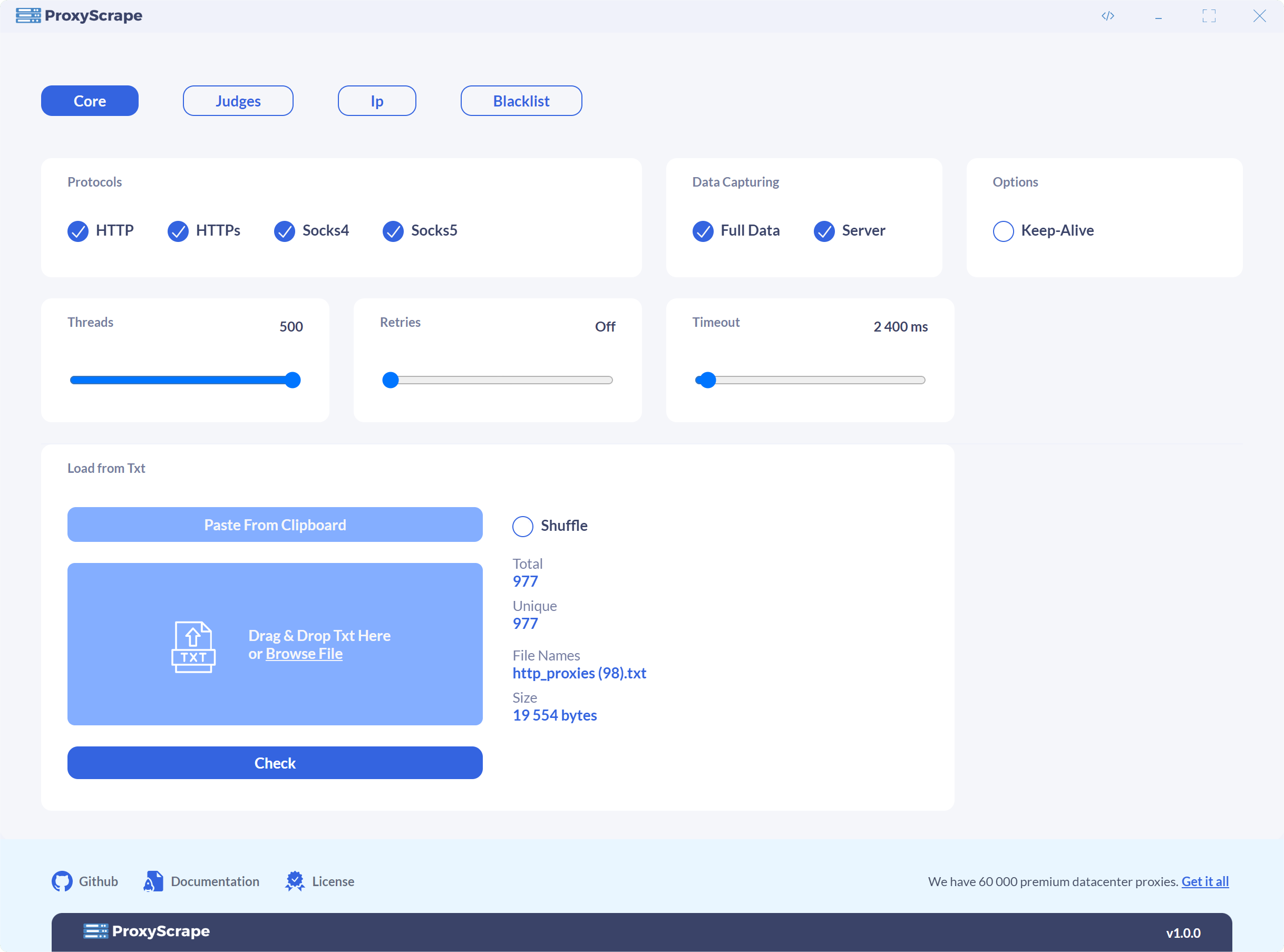

As you explore the world of proxies, you will encounter various tools and techniques designed to enhance your web scraping. From proxy scrapers that gather lists of active proxies to checkers that verify their functionality and speed, knowing how to use these resources efficiently can significantly affect the success of your scraping tasks. Whether you are looking for free proxies or weighing the benefits of paid options, a thorough understanding of the best proxy sources and testing methods is essential for effective and reliable web scraping.

Understanding Proxies in Web Scraping

Proxy servers act as intermediaries between a user and the web, allowing for more effective and discreet web scraping. By routing requests through a proxy server, users can conceal their real IP addresses, which helps them avoid detection and potential blocking by websites. This is particularly important when scraping large amounts of data, as many sites have measures in place to restrict automated requests and protect their resources.
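To make the idea of routing requests concrete, here is a minimal sketch using Python's standard-library `urllib.request`. The proxy address is a placeholder from a documentation IP range (an assumption, not a working proxy), and `build_proxied_opener` is a hypothetical helper name.

```python
import urllib.request

# Hypothetical proxy from the 203.0.113.0/24 documentation range;
# substitute a proxy you are authorized to use.
PROXY_URL = "http://203.0.113.10:8080"

def build_proxied_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends both http:// and https:// requests via proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)

opener = build_proxied_opener(PROXY_URL)
# The target site would now see the proxy's IP address instead of yours:
# with opener.open("https://example.com/", timeout=10) as resp:
#     html = resp.read()
```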

Different proxy types suit different scraping needs. HTTP proxies are commonly used for web scraping because they handle ordinary web traffic efficiently and can process both GET and POST requests. SOCKS proxies, by contrast, operate at a lower level, which makes them more versatile: they can carry multiple protocols, including non-HTTP traffic. Knowing the distinctions between these proxy types helps scrapers choose the right tool for their specific requirements.
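As an illustration of how the proxy type shows up in practice, the sketch below builds the `proxies` mapping accepted by the third-party `requests` library. It assumes `requests` is installed with its optional SOCKS support (`pip install "requests[socks]"`); the helper name `proxy_mapping` and the addresses are hypothetical.

```python
# Proxy URL schemes as understood by `requests`; the SOCKS schemes
# additionally require the optional PySocks dependency.
SCHEMES = {
    "http": "http",       # HTTP proxy; HTTPS targets are tunneled via CONNECT
    "socks4": "socks4",   # SOCKS4: TCP only, no password authentication
    "socks5": "socks5h",  # SOCKS5 with hostnames resolved by the proxy ("h")
}

def proxy_mapping(kind: str, host: str, port: int) -> dict[str, str]:
    """Build a requests-style `proxies` dict for the given proxy type."""
    if kind not in SCHEMES:
        raise ValueError(f"unsupported proxy type: {kind!r}")
    url = f"{SCHEMES[kind]}://{host}:{port}"
    # The same proxy serves both plain-HTTP and HTTPS target URLs.
    return {"http": url, "https": url}
```

You would then pass the result as `requests.get(url, proxies=proxy_mapping("socks5", "203.0.113.10", 1080), timeout=10)`. The `socks5h` scheme asks the proxy to resolve hostnames, so DNS lookups do not leak from your own machine.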

The choice between free and paid proxies is an important one in web scraping. Free proxies may be tempting because they cost nothing, but they often come with drawbacks such as lower speed, poor reliability, and potential security risks. Paid proxies, on the other hand, tend to offer better speed, stronger anonymity, and technical support. Knowing how to find good proxies and how to choose between private and public options can therefore significantly affect the outcome and performance of web scraping projects.

Types of Proxies: HTTP vs. SOCKS

When it comes to web scraping, understanding the available proxy types is essential. HTTP (Hypertext Transfer Protocol) proxies work specifically with web traffic, making them suitable for scraping sites served over HTTP and HTTPS. They are well suited to tasks such as fetching web pages, gathering data, and navigating sites. However, HTTP proxies are limited to web traffic, and they reach HTTPS sites through a tunnel opened with the CONNECT method, which some low-quality proxies do not support.
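HTTP proxies handle HTTPS sites by opening a tunnel with the HTTP CONNECT method. To show what that looks like on the wire, this sketch builds the plain-text request a client sends before any encrypted traffic flows. It omits proxy authentication headers, and the helper name is hypothetical; standard clients such as `urllib` and `requests` send this for you.

```python
def build_connect_request(host: str, port: int = 443) -> bytes:
    """Bytes a client sends to an HTTP proxy to open a tunnel to host:port.

    After the proxy replies with a 2xx status, the client performs the TLS
    handshake with the target through the tunnel, so the proxy only relays
    encrypted bytes it cannot read.
    """
    authority = f"{host}:{port}"
    return (
        f"CONNECT {authority} HTTP/1.1\r\n"
        f"Host: {authority}\r\n"
        "\r\n"
    ).encode("ascii")
```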

SOCKS proxies, on the other hand, are more general-purpose and can relay almost any kind of traffic, whether HTTP, FTP, or other protocols. This flexibility means SOCKS proxies can be used for a wider range of tasks, including file transfers and online gaming, which makes them a popular choice for users who need greater flexibility and anonymity. The two commonly used versions, SOCKS4 and SOCKS5, differ in capability: SOCKS5 adds features such as authentication and UDP support.
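For comparison, a SOCKS5 proxy speaks a small binary protocol defined in RFC 1928, with username/password authentication specified in RFC 1929. The sketch below builds only the client's opening messages and assumes you would send them over a TCP socket yourself; in practice a library such as PySocks handles this. The function names are hypothetical.

```python
import struct

def socks5_greeting(with_password: bool = False) -> bytes:
    """Client greeting: version 5, number of methods, then the methods offered.

    0x00 = no authentication, 0x02 = username/password (RFC 1929).
    """
    methods = [0x00, 0x02] if with_password else [0x00]
    return bytes([0x05, len(methods), *methods])

def socks5_connect_request(host: str, port: int) -> bytes:
    """CONNECT request asking the proxy to resolve `host` and open a TCP stream.

    Layout: VER=5, CMD=1 (CONNECT), RSV=0, ATYP=3 (domain name),
    one length byte, the domain bytes, then the port as big-endian uint16.
    """
    name = host.encode("idna")
    if len(name) > 255:
        raise ValueError("domain name too long for SOCKS5")
    return bytes([0x05, 0x01, 0x00, 0x03, len(name)]) + name + struct.pack(">H", port)
```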

Choosing between HTTP and SOCKS proxies ultimately depends on your specific needs. If your main goal is to scrape web content efficiently, HTTP proxies may be sufficient. For more complex tasks that involve multiple protocols or require a higher level of anonymity, however, SOCKS proxies are typically the better option. Understanding the differences can greatly affect the outcome of your web scraping efforts.

Top Tools for Proxy Scraping

When it comes to scraping with proxies, several tools stand out for their effectiveness and ease of use. One popular choice is ProxyStorm, which offers a solid platform for collecting and organizing proxy lists. It lets users quickly extract HTTP and SOCKS proxies of various types, covering diverse web scraping needs. The tool is particularly useful for building comprehensive proxy lists for automated tasks.

For those who prefer a no-cost option, a free proxy scraper can be very effective at finding working proxies. Many of these tools include built-in features that assess each proxy's responsiveness and anonymity level. With these free resources, users can collect a large number of proxies while still filtering for quality, which is vital for running an effective scraping operation.
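A basic free proxy scraper often boils down to pattern-matching `ip:port` pairs in a downloaded list page. The sketch below assumes the page text has already been fetched and that addresses appear as `ip:port` (many sites instead place the IP and port in separate table cells, which this regex would miss); `extract_proxies` is a hypothetical name.

```python
import ipaddress
import re

# Matches "IPv4:port" pairs anywhere in the text of a proxy-list page.
PROXY_PATTERN = re.compile(r"\b(\d{1,3}(?:\.\d{1,3}){3}):(\d{1,5})\b")

def extract_proxies(text: str) -> list[str]:
    """Return unique, syntactically valid ip:port strings in order of appearance."""
    seen: dict[str, None] = {}  # dict keeps insertion order and removes duplicates
    for ip, port in PROXY_PATTERN.findall(text):
        try:
            ipaddress.IPv4Address(ip)  # rejects octets above 255
        except ValueError:
            continue
        if not 0 < int(port) < 65536:  # port must fit in 16 bits
            continue
        seen.setdefault(f"{ip}:{port}", None)
    return list(seen)
```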

Another essential tool is a proxy checker, which plays a vital role in validating scraped proxies. A good proxy checker quickly identifies which proxies are working and fit for use. It typically tests speed, reliability, and anonymity level, giving users useful insight into their proxy choices. By adding a dependable proxy verification tool, scrapers can streamline their data extraction and raise overall productivity.

Assessing Proxy Server Performance and Speed

When scraping the web, making sure your proxies are working and efficient is crucial for smooth data extraction. A reliable proxy checker can confirm whether a proxy is operational by sending requests through it and monitoring the responses. Tools like Proxy Checker offer features that help with this process. They typically check connection success, response time, and uptime, allowing you to remove failing proxies from your list.
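A minimal liveness check can be sketched with the standard library alone. This version only confirms that something accepts TCP connections on the proxy's port, which is a cheaper and weaker test than sending a real request through the proxy; the function names and the default timeout and worker count are assumptions.

```python
import socket
from concurrent.futures import ThreadPoolExecutor

def is_reachable(proxy: str, timeout: float = 3.0) -> bool:
    """True if a TCP connection to the proxy's ip:port succeeds within timeout."""
    host, _, port = proxy.rpartition(":")
    try:
        with socket.create_connection((host, int(port)), timeout=timeout):
            return True
    except (OSError, ValueError):  # refused, timed out, or malformed entry
        return False

def filter_reachable(proxies: list[str], workers: int = 32) -> list[str]:
    """Check proxies in parallel and keep the reachable ones, preserving order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(is_reachable, proxies))
    return [p for p, ok in zip(proxies, results) if ok]
```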

Speed testing matters because a slow proxy can greatly reduce scraping efficiency. One way to evaluate proxy speed is to measure the time it takes to receive a response after sending a request. Many proxy verification tools include built-in speed tests whose results highlight the fastest proxies. This lets you focus on the best-performing options for your web scraping needs and access data more quickly.
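The sketch below times a TCP connection as a rough stand-in for latency and ranks the results. Using connection time instead of full response time is an assumption made for simplicity; timing an actual request through each proxy to your target site gives a more realistic figure. The names are hypothetical.

```python
import socket
import time
from typing import Optional

def connect_latency(proxy: str, timeout: float = 3.0) -> Optional[float]:
    """Seconds taken to open a TCP connection to ip:port, or None on failure."""
    host, _, port = proxy.rpartition(":")
    start = time.perf_counter()
    try:
        with socket.create_connection((host, int(port)), timeout=timeout):
            return time.perf_counter() - start
    except (OSError, ValueError):
        return None

def rank_by_latency(latencies: dict[str, Optional[float]]) -> list[str]:
    """Fastest first; proxies that failed (None) are dropped."""
    working = {p: t for p, t in latencies.items() if t is not None}
    return sorted(working, key=lambda p: working[p])
```

Typical use: `fastest = rank_by_latency({p: connect_latency(p) for p in candidates})[:10]`.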

In addition to functionality and speed checks, verifying proxy anonymity is also essential. There are several proxy types, including HTTP, SOCKS4, and SOCKS5, each serving different purposes. Some verification tools report the level of anonymity a proxy provides. By checking whether a proxy reveals your real IP address or keeps it hidden, you can select proxies that match your scraping goals, strengthening both security and performance.
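Anonymity checks usually work by requesting a "judge" page through the proxy and inspecting which headers arrive. The sketch below assumes you control such an endpoint and that it echoes the received headers back as a dictionary. The three labels (transparent, anonymous, elite) are a common industry convention rather than a standard, and the header list is an assumption.

```python
import re

# Headers that commonly reveal a proxy hop or the original client address.
# Which of these a given proxy adds varies, so this list is an assumption.
PROXY_HEADERS = ("via", "x-forwarded-for", "forwarded", "x-real-ip", "proxy-connection")

def _tokens(value: str) -> set[str]:
    """Split a header value such as 'for=192.0.2.1;proto=https' into tokens."""
    return set(re.split(r'[\s,;="]+', value))

def classify_anonymity(echoed_headers: dict[str, str], real_ip: str) -> str:
    """Label a proxy from the headers a judge server received through it.

    transparent: your real IP appears in some header
    anonymous:   proxy-revealing headers are present, but your IP is not
    elite:       no proxy-revealing headers at all
    """
    headers = {name.lower(): value for name, value in echoed_headers.items()}
    # Compare whole tokens so 198.51.100.23 does not match 198.51.100.230.
    if any(real_ip in _tokens(value) for value in headers.values()):
        return "transparent"
    if any(name in headers for name in PROXY_HEADERS):
        return "anonymous"
    return "elite"
```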

Finding Reliable Free Proxies

When looking for high-quality free proxies, it is essential to seek out reliable sources that update their proxy lists frequently. Platforms that specialize in collecting and distributing free proxies often offer a range of options, including HTTP and SOCKS proxies. It is important to choose proxies from sites that regularly monitor their lists and remove non-working entries, so that the list you use stays current and operational.
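If you pull from several free lists, merging them and pruning entries that have not passed a recent check keeps your own working list current. This sketch assumes one `ip:port` per line, treats lines starting with `#` as comments, and records each proxy's last successful check as a Unix timestamp; all names and the one-hour default are hypothetical.

```python
import time
from typing import Iterable, Optional

def merge_proxy_lists(sources: Iterable[Iterable[str]]) -> list[str]:
    """Combine several ip:port lists, keeping the first occurrence of each entry."""
    merged: dict[str, None] = {}
    for source in sources:
        for line in source:
            entry = line.strip()
            if entry and not entry.startswith("#"):  # skip blanks and comments
                merged.setdefault(entry, None)
    return list(merged)

def prune_stale(last_ok: dict[str, float], max_age: float = 3600.0,
                now: Optional[float] = None) -> list[str]:
    """Keep proxies whose last successful check is at most max_age seconds old."""
    now = time.time() if now is None else now
    return [p for p, ts in last_ok.items() if now - ts <= max_age]
```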

Another approach is to tap into online communities, such as discussion boards and social media groups focused on web scraping. Members of these communities often share their findings on working free proxies, along with practical advice on how to test and verify them. Engaging with these groups can help you discover hidden gems while staying informed about the potential risks of using public proxies.

Once you have compiled a list of free proxies, running it through a proxy checker is essential. These tools let you test the speed, anonymity, and overall reliability of each proxy. It is vital to confirm not just that a proxy works, but also that it meets the specific demands of your web scraping tasks, so that you maximize efficiency and minimize downtime in your data extraction.
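Checking whether a proxy meets your task's demands can be made explicit with thresholds. The sketch assumes you have run several checks per proxy, recording latency in seconds (or `None` for a failed attempt), and have an anonymity label such as transparent, anonymous, or elite. The class name and default thresholds are arbitrary placeholders.

```python
from dataclasses import dataclass, field
from statistics import median
from typing import List, Optional

# Higher rank = better hidden; labels follow the common three-level convention.
ANONYMITY_RANK = {"transparent": 0, "anonymous": 1, "elite": 2}

@dataclass
class ProxyStats:
    """Outcomes of repeated checks against one proxy."""
    address: str
    latencies: List[Optional[float]] = field(default_factory=list)  # None = failed attempt
    anonymity: str = "transparent"

    def success_rate(self) -> float:
        if not self.latencies:
            return 0.0
        return sum(t is not None for t in self.latencies) / len(self.latencies)

    def median_latency(self) -> float:
        ok = [t for t in self.latencies if t is not None]
        return median(ok) if ok else float("inf")

def meets_requirements(stats: ProxyStats, max_latency: float = 2.0,
                       min_success: float = 0.8, min_anonymity: str = "anonymous") -> bool:
    """True if the proxy is fast, reliable, and anonymous enough for the task."""
    return (
        stats.success_rate() >= min_success
        and stats.median_latency() <= max_latency
        and ANONYMITY_RANK[stats.anonymity] >= ANONYMITY_RANK[min_anonymity]
    )
```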

Automating with Proxy Servers

Using proxy servers well can make automated web scraping far more robust. By routing requests through different proxy servers, you can bypass geographic restrictions and reduce the chance of being blocked by target websites. This is particularly useful when you are collecting large volumes of data or when your operations need many IP addresses to avoid being identified. A robust proxy management system helps streamline this automation by making sure consecutive requests go out through different proxies, preserving anonymity.
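A simple round-robin rotator illustrates the rotation idea. It assumes a fixed list of proxy addresses and a hypothetical class name. Round-robin guarantees a different proxy for each request only within one pass through the pool; with N proxies, every (N+1)-th request reuses an IP.

```python
import itertools
import threading
from typing import Iterable

class ProxyRotator:
    """Hands out proxies in round-robin order so consecutive requests use different IPs."""

    def __init__(self, proxies: Iterable[str]) -> None:
        pool = list(proxies)
        if not pool:
            raise ValueError("need at least one proxy")
        self._cycle = itertools.cycle(pool)
        self._lock = threading.Lock()  # makes next_proxy safe to call from worker threads

    def next_proxy(self) -> str:
        with self._lock:
            return next(self._cycle)
```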

Adding a proxy scraper and a proxy checker to your workflow lets you gather and verify reliable proxies efficiently. With tools like ProxyStorm or various proxy list generators, you can find new proxies that are fast and reliable. A high-quality proxy checker then confirms that the selected proxies work and meet the speed your scraping tasks require. Combining automated proxy verification with your scraping routines saves valuable time and effort, letting you focus on data extraction rather than troubleshooting proxy issues.
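Combining verification with scraping can be as simple as retiring proxies that keep failing while the scraper runs. In this sketch, `fetch_fn` is a hypothetical callable you supply (for example, a wrapper around a `urllib` opener) that raises `OSError` on failure; `urllib.error.URLError` subclasses `OSError`, so ordinary urllib failures fit. The class and parameter names are assumptions.

```python
from collections import deque
from typing import Callable, Dict, Iterable, List, Optional

class ProxyPool:
    """Round-robin pool that drops proxies after repeated failures."""

    def __init__(self, proxies: Iterable[str], max_failures: int = 3) -> None:
        self._queue = deque(proxies)
        self._failures: Dict[str, int] = {}
        self.max_failures = max_failures

    def proxies(self) -> List[str]:
        """Proxies still in rotation, in the order they will be tried."""
        return list(self._queue)

    def fetch(self, url: str, fetch_fn: Callable[[str, str], str], attempts: int = 3) -> str:
        """Call fetch_fn(url, proxy) with successive proxies until one succeeds."""
        last_error: Optional[OSError] = None
        for _ in range(attempts):
            if not self._queue:
                break
            proxy = self._queue.popleft()
            try:
                result = fetch_fn(url, proxy)
            except OSError as exc:
                last_error = exc
                self._failures[proxy] = self._failures.get(proxy, 0) + 1
                if self._failures[proxy] < self.max_failures:
                    self._queue.append(proxy)  # still usable: retry it later
                continue
            self._failures[proxy] = 0  # a success resets the failure count
            self._queue.append(proxy)
            return result
        raise RuntimeError(f"all attempts failed for {url}") from last_error
```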

It is also essential to understand the differences between proxy types such as HTTP, SOCKS4, and SOCKS5, as this knowledge shapes your automation strategy. Depending on the nature of the task, you may choose private proxies for high-security needs or shared proxies for broad, low-cost access to a wide range of data. By balancing private and public proxies, and by using tools for proxy verification and speed testing, you can build a more resilient scraping framework that adapts efficiently to changing web environments.

Best Practices for Using Proxies

When using proxies for web scraping, it is important to choose the right type for your needs. For example, HTTP proxies are appropriate for standard web browsing and scraping, while SOCKS proxies are more versatile and can handle different types of traffic. Understanding the differences between HTTP, SOCKS4, and SOCKS5 proxies can help you pick the best fit for each task. In addition, use a proxy list that is updated regularly to avoid relying on outdated or unreliable proxies.

Another strategy is to verify the performance and anonymity of your proxies. A proxy checker can help you test proxy speed and confirm that your anonymity is protected while scraping. Adding a proxy verification tool or a fast proxy checker to your scraping workflow saves time by filtering out slow or non-anonymous proxies before your scraping sessions start. This keeps the process efficient and prevents disruptions during data extraction.

Finally, rotate your proxies regularly to avoid bans and maintain access to the websites you are scraping. A private proxy pool can reduce IP-blocking problems, since private proxies are less likely to be flagged than public ones. A proxy scraper can also help you source quality proxies tailored to your scraping requirements. Combining these practices makes for a smoother scraping experience and more effective data extraction.